Within the SingleTree project, the Photogrammetry and Remote Sensing Group at ETH Zürich is pushing the boundaries of forest digitalisation by laying the foundations for next-generation artificial intelligence models tailored to tree-level data. Over the past months, the team has focused on two key objectives: building the first version of a Forest Point Cloud Foundation Model, in close collaboration with NIBIO, and designing a new, more efficient neural network architecture to support future developments.

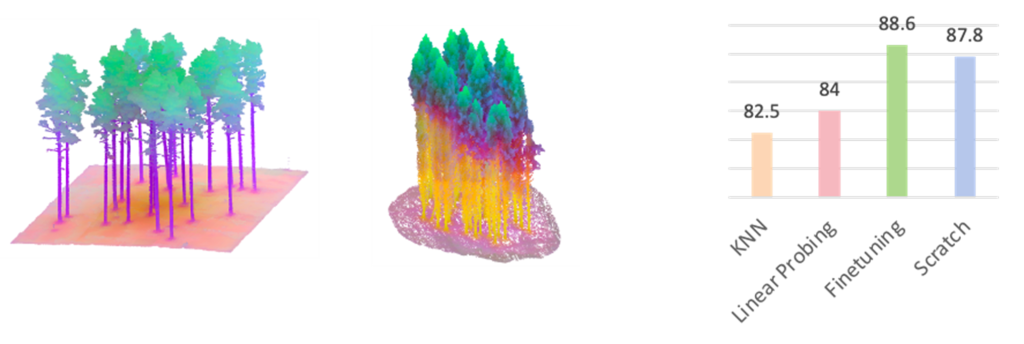

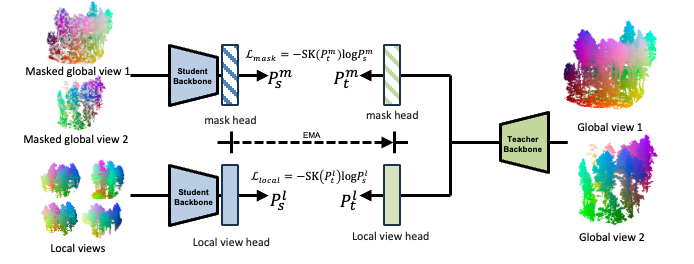

As an initial step toward large-scale, foundational AI for forestry, the team retrained a forest-specific version of the state-of-the-art SONATA architecture using purely self-supervised learning (Figure 1). This approach allows the model to learn directly from raw point cloud data, without the need for manually labelled training datasets—a critical advantage for scaling AI across diverse forest types and regions.

Figure 1: Self-supervised training of the point cloud foundation model via student-teacher distillation in feature space.

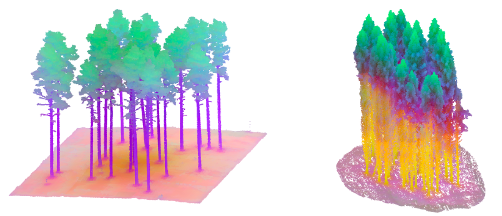
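To make the idea of student-teacher distillation in feature space more concrete, here is a minimal, illustrative sketch in plain Python. It is not the SONATA training code. The exponential-moving-average teacher update, the cosine-distance loss, and the names `ema_update`, `cosine_distance` and `feature_distillation_loss` are all assumptions chosen for illustration; the article does not specify which loss or update rule the team uses.

```python
import math

def ema_update(teacher, student, momentum=0.99):
    """Assumed update rule: move each teacher weight slightly toward the student."""
    return [momentum * t + (1.0 - momentum) * s for t, s in zip(teacher, student)]

def cosine_distance(a, b):
    """1 - cosine similarity between two feature vectors (assumed loss choice)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm

def feature_distillation_loss(student_feats, teacher_feats):
    """Average per-point mismatch between student and teacher features.

    The teacher features act as fixed targets; no labels are involved.
    """
    pairs = list(zip(student_feats, teacher_feats))
    return sum(cosine_distance(s, t) for s, t in pairs) / len(pairs)

# Toy example: two points with 2-D features from each network.
student_feats = [[1.0, 0.0], [0.0, 1.0]]
teacher_feats = [[1.0, 0.0], [1.0, 0.0]]
loss = feature_distillation_loss(student_feats, teacher_feats)

# Toy "weights": the teacher drifts slowly toward the student.
new_teacher = ema_update([0.0, 1.0], [1.0, 1.0], momentum=0.9)
```

In a real setup the student would typically see a masked or augmented view of the same point cloud, and only the student would be updated by gradient descent. Those details are omitted here.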

The resulting model already demonstrates strong performance on established forest scan datasets such as FOR-Instance. Straight out of the box, and without any additional training by the user, the model achieves a mean intersection-over-union (mIoU) of over 82% for ground, wood, and leaf segmentation. When fine-tuned for the segmentation task, performance rises above 88%, surpassing even specialised models trained from scratch (Figure 2). These early results confirm the potential of foundation models to generalise across forest conditions and applications.
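For readers unfamiliar with the metric, mIoU averages, over all classes, the ratio between the points correctly assigned to a class and the points that are either predicted or labelled as that class. The sketch below computes it for a toy example with three classes. The label encoding (0 = ground, 1 = wood, 2 = leaf), the function name `mean_iou`, and the choice to skip classes absent from both prediction and reference are assumptions for illustration, not the evaluation protocol used by the team.

```python
def mean_iou(pred, target, num_classes):
    """Return (mIoU, per-class IoU list) for two sequences of integer labels."""
    ious = []
    for c in range(num_classes):
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:  # assumed convention: skip classes that never occur
            ious.append(inter / union)
    if not ious:
        return float("nan"), ious
    return sum(ious) / len(ious), ious

# Toy per-point labels: 0 = ground, 1 = wood, 2 = leaf (assumed encoding).
target = [0, 0, 1, 1, 2, 2, 2, 2]
pred = [0, 0, 1, 2, 2, 2, 2, 1]
miou, per_class = mean_iou(pred, target, num_classes=3)
```

In this toy case the ground class is segmented perfectly, while the confusion between wood and leaf points lowers the IoU of both classes and therefore the mean.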

Further refinements and a comprehensive evaluation of the model are currently underway in collaboration with NIBIO. The partners expect to submit the results for scientific publication during the coming winter, marking an important milestone for AI-driven forest analysis within SingleTree.

Alongside model training, the ETH Zürich team has also revisited the core architecture used to process point cloud data. The current implementation of the Foundation Model builds on Point Transformer V3 (PTv3). However, practical experimentation revealed that PTv3 is often heavier than necessary, demanding substantial memory and computational resources.

In response, the researchers carried out an in-depth investigation into the fundamental design principles of point cloud backbones. The outcome is LitePT, a new architecture that is lighter and more efficient than its predecessor while also performing better.

Together, these advances place SingleTree at the forefront of forest-focused AI research—demonstrating how foundation models and efficient architectures can unlock scalable, tree-level insights from complex 3D forest data.